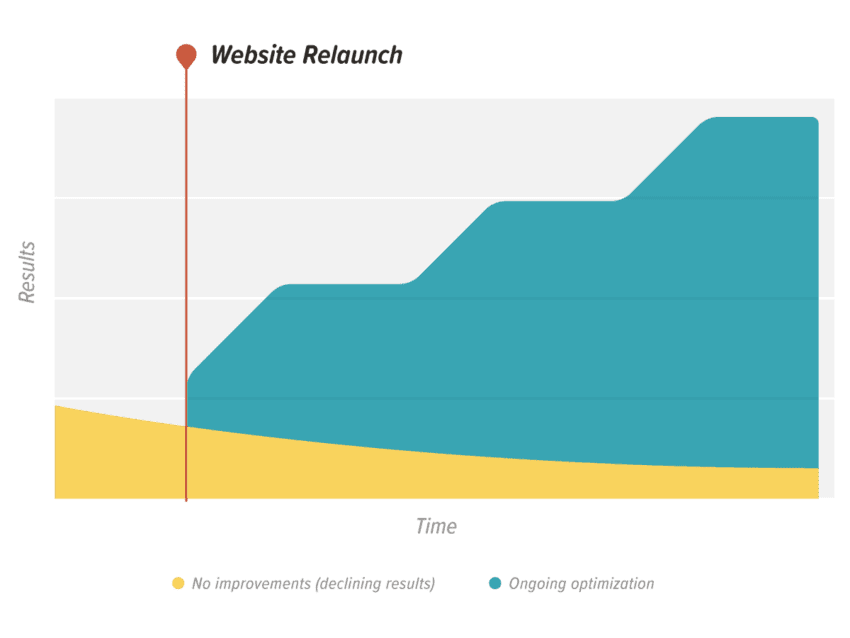

Improved search engine placement means increased profitability

Improved search engine placement for your business means increased profitability from your website. That’s one of the key performance indicators (KPIs) that show our website optimization services are working effectively for you. To that end, we track and measure hundreds of metrics to gain insights into what’s working and what’s not.

Within six months of starting your search engine optimization engagement, you will have experienced cumulative gains in the KPIs that matter most, primarily revenue from organic search. These gains, viewed as a long-term, semi-permanent rise in your website’s value, should represent a complete return on your investment in Orbit’s SEO work.

If for some reason your search engine placement isn’t where we want it to be (due to external forces, market conditions, outside collaborators, etc.), we take the time to review your results and plot the positive trajectory toward that goal, including how soon you can expect to reach it.